- Topologic is not commercial software. It is a research project that yielded a piece of free and open source software. This means I am happy for anyone to use it, but only if they find it useful.

I understand that. I have never been a pure researcher, nor am I a pure practitioner :-) We in India have an interesting mythological story of Narasimha, in which the fragility of sharp boundaries is highlighted. (Here is an article I wrote on FB for my students about it: https://www.facebook.com/sabufrancis/posts/10153842658865549) The real challenges arise from the boundaries we create in our knowledge. But then those challenges can be converted into opportunities too.

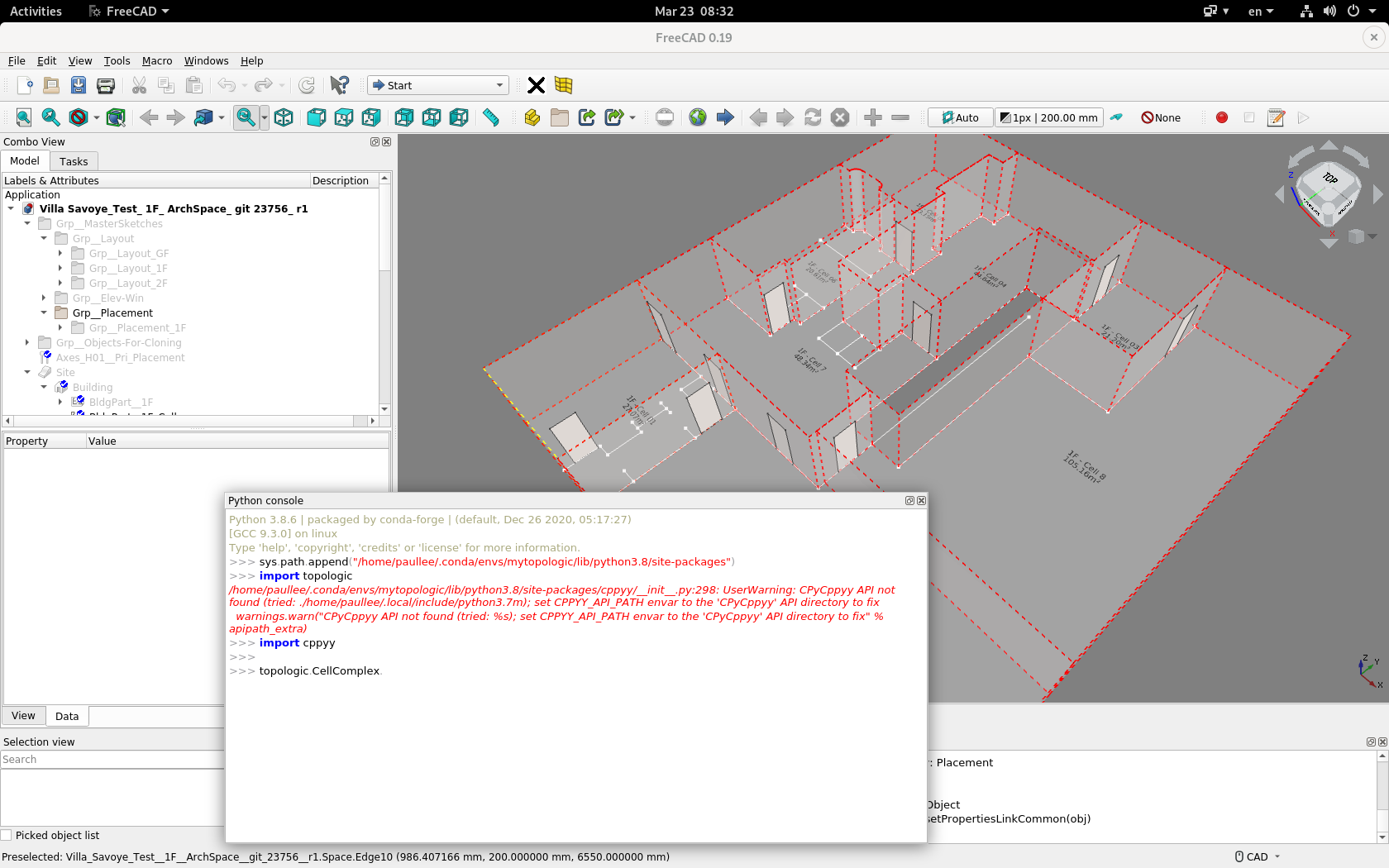

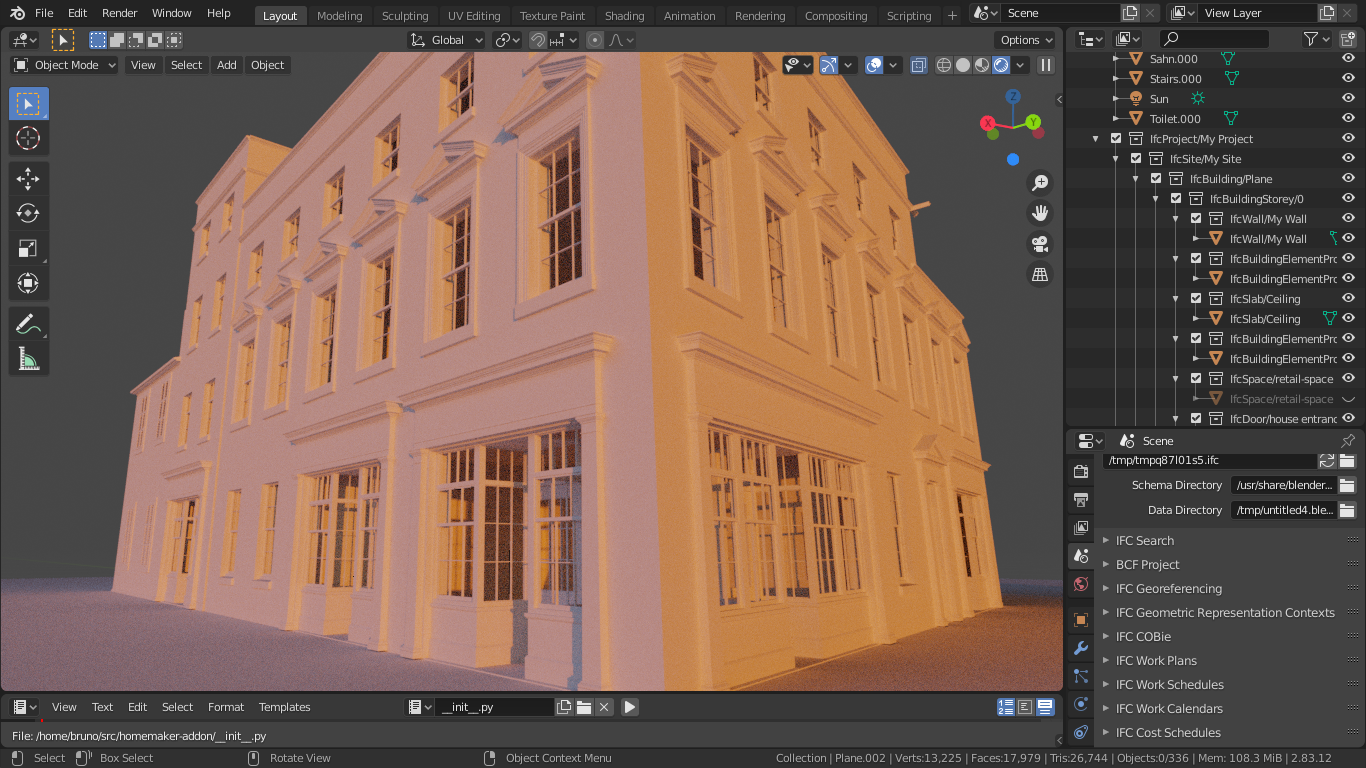

- Design, as you said, can start from various points. Again, if you find Topologic useful at your starting point or at any other point during your design process, then that is where it should be introduced. Topologic assumes that you want to use digital processes to represent your design, and I think it is more suitable in the early phases, but others have used it in Revit/BIM analysis workflows.

I understand that position. I was merely pointing to the pragmatic architect, who may not be so empathetic to the internal theories of such efforts. (I will attempt to address this later below, under another point.)

Now to answer some questions:

Topologic represents more than cells: Vertex, Edge, Wire, Face, Shell, CellComplex, Cluster, Graph, Aperture, Content, Context, and Dictionary.

Topologic uses OpenCASCADE, exactly like FreeCAD, IfcOpenShell, and the BlenderBIM add-on for Blender. As such, I am confident it can handle complex shapes. I demo cuboids because they are easy to create and simpler to understand.

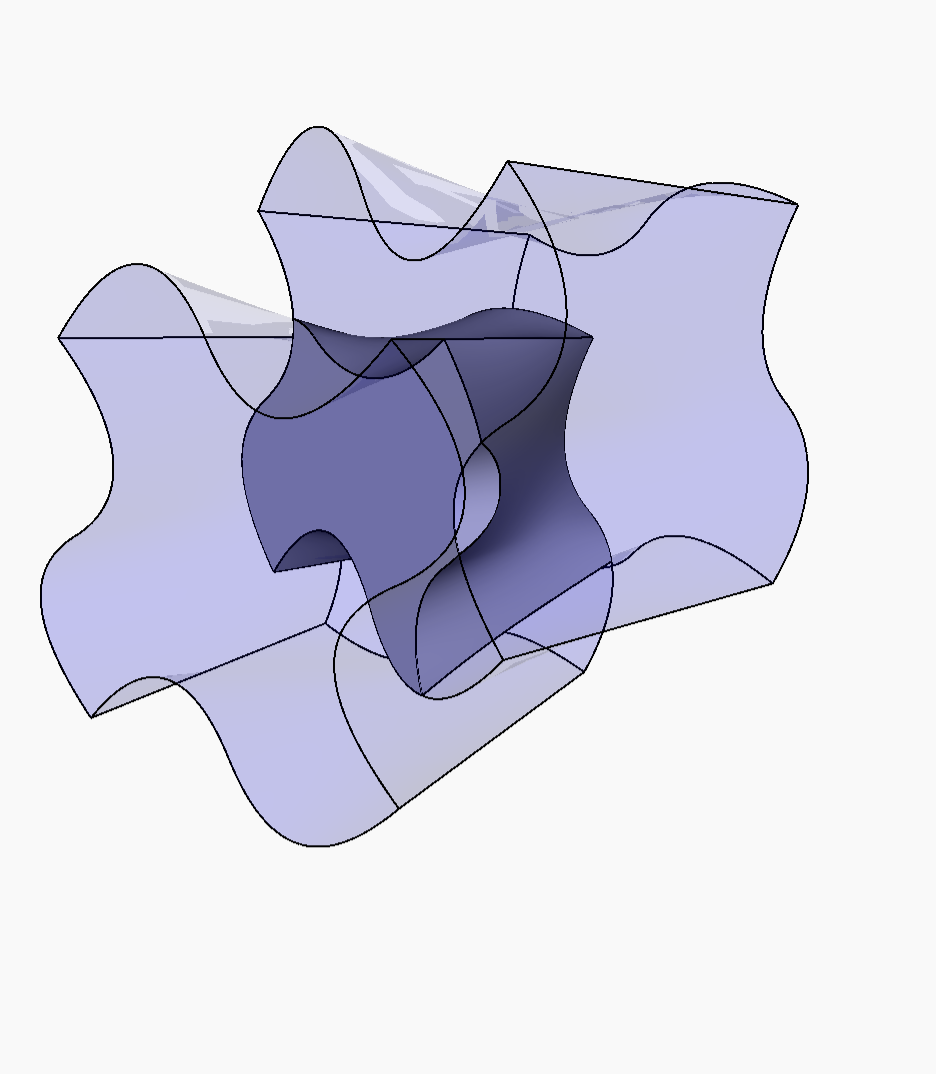

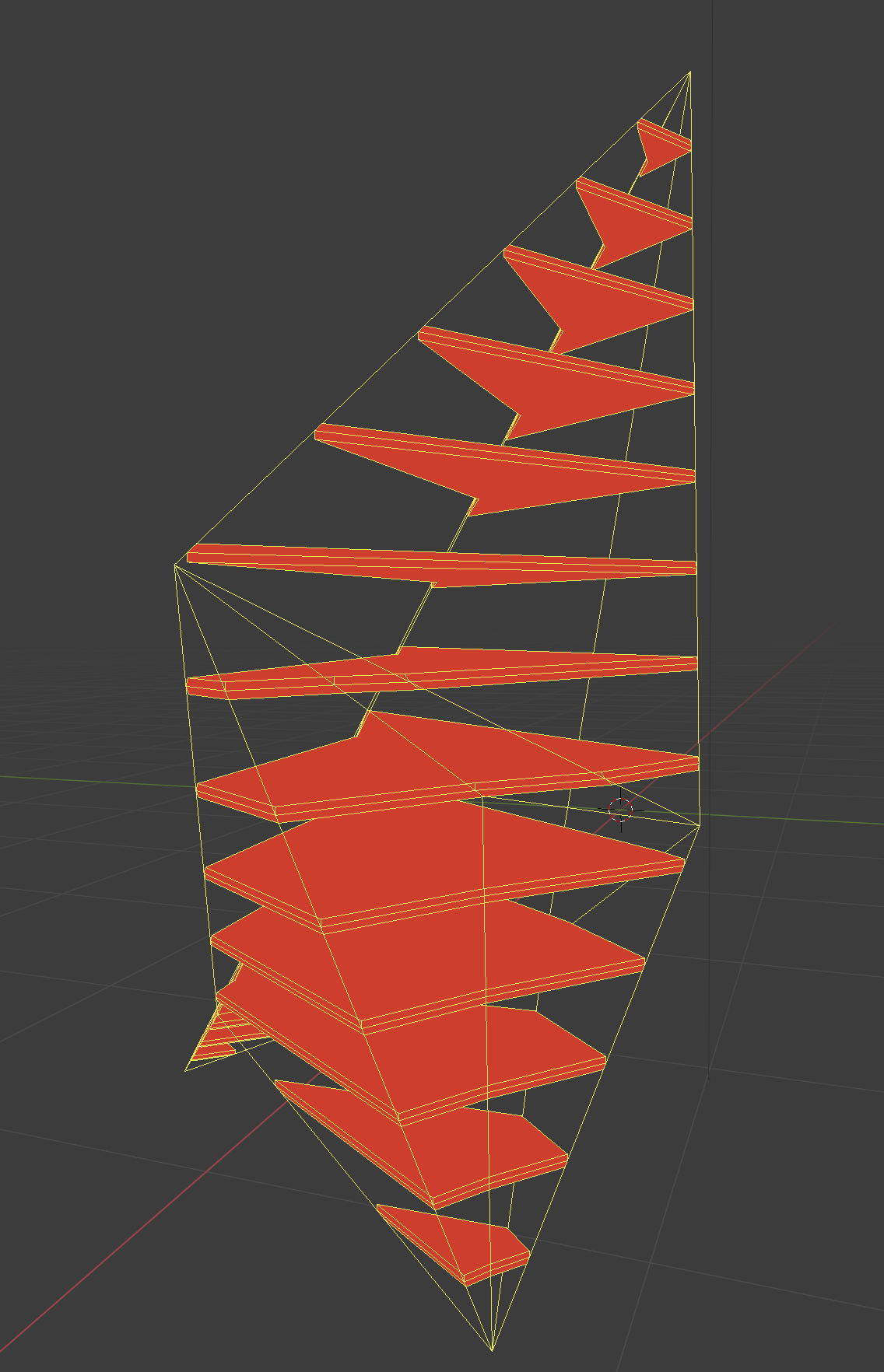

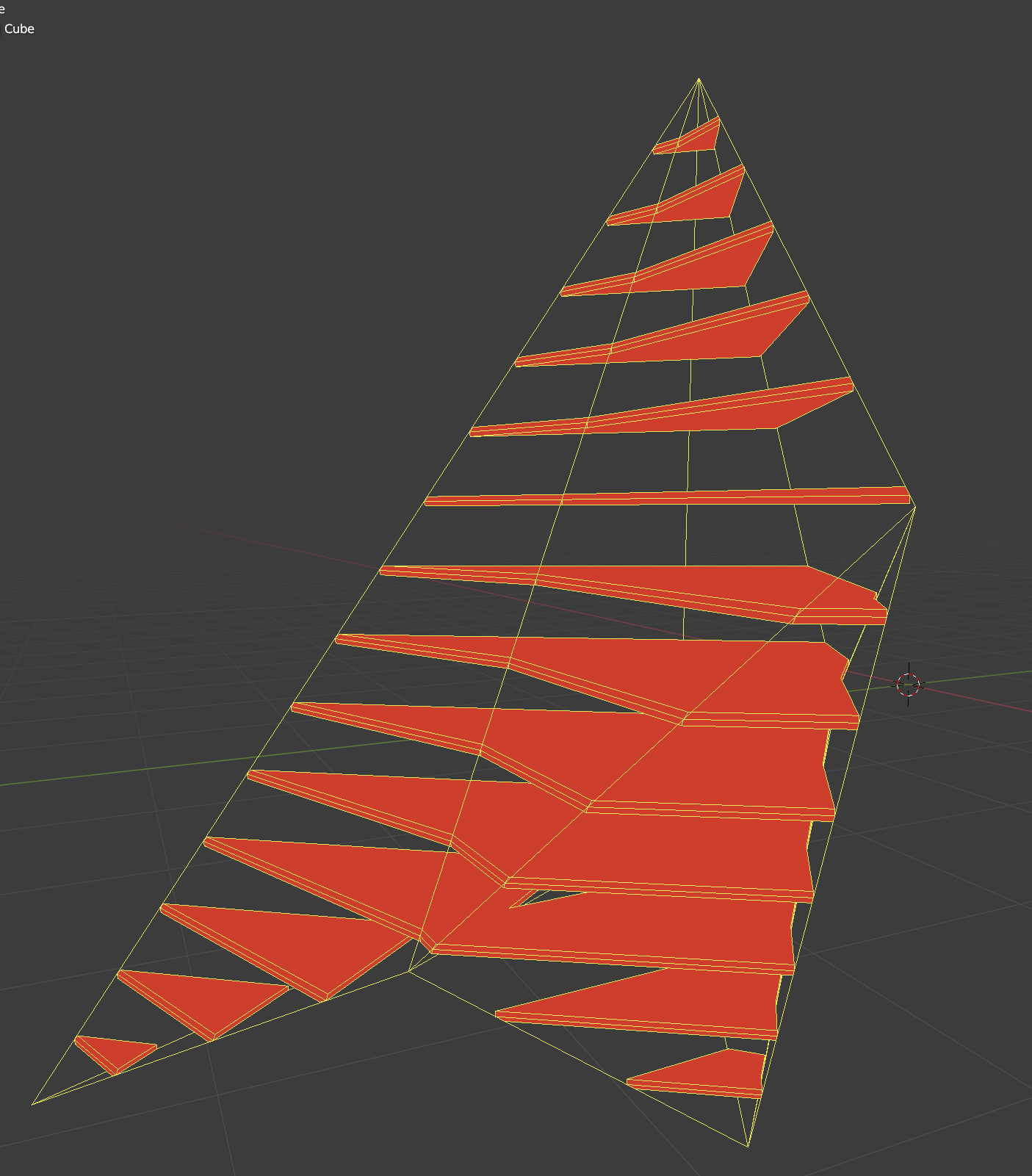

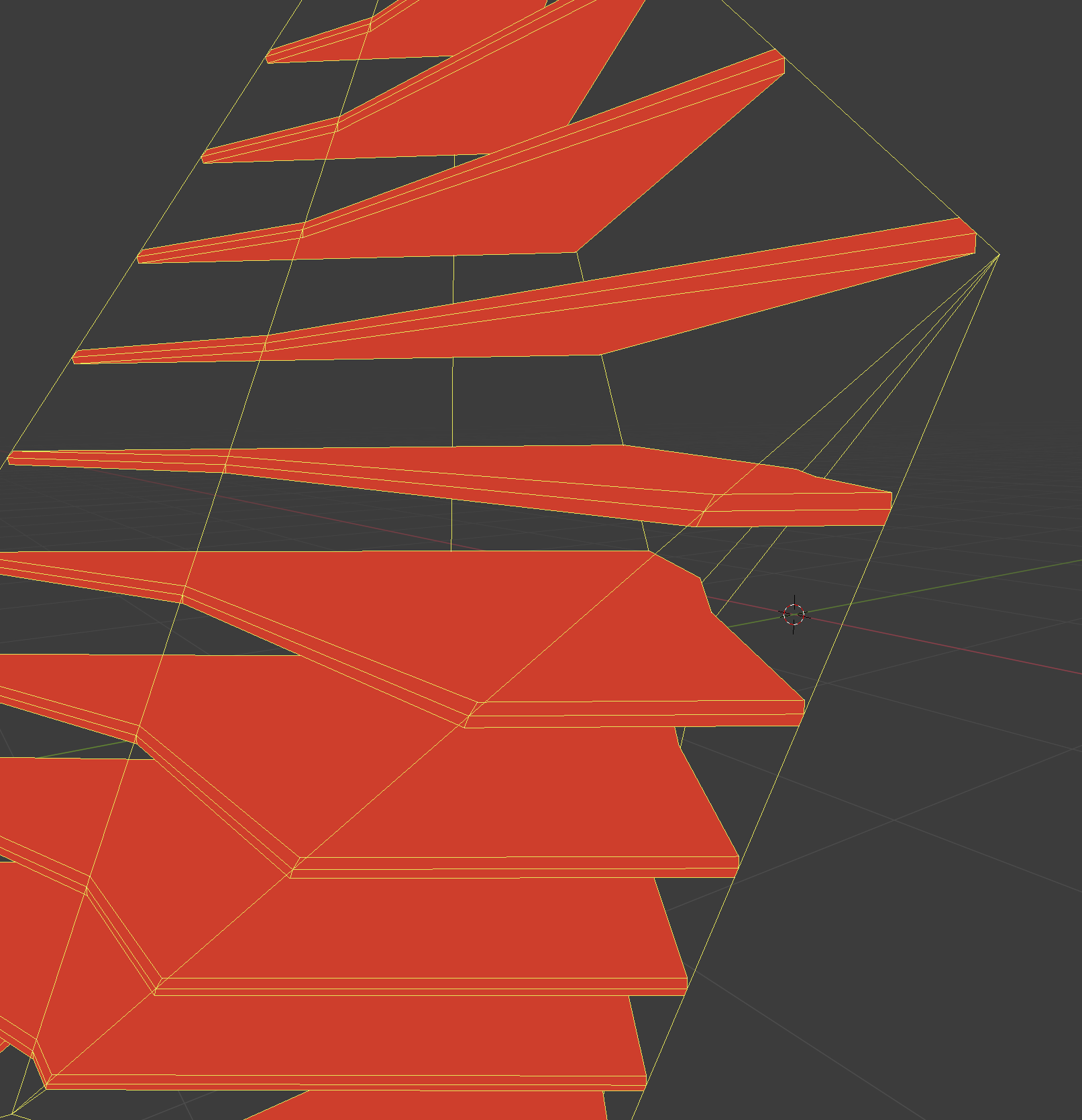

Topologic has no limitation regarding convex and concave shapes. It is also not limited to planar surfaces. Objects are B-reps (boundary representations) and can be quite complex, including NURBS surfaces.

I would love to take a look at any examples that do not use cuboids. Even if the innards are not explained, that is fine -- just the eventual output for complex real-world geometries, and their practical use, would be very nice.

Your question regarding mathematical complexity is not something we addressed. We are not mathematicians, so we did not have the resources to carry out formal calculations. I would refer you to OpenCASCADE to see if they have any metrics.

I was looking for information along these lines: https://carlalexander.ca/what-is-software-complexity/ (I am not sure if that is an authoritative article, but there are many of that nature.) There does not seem to be much mathematical training needed for such calculations. If such calculations are attempted in this project, it would really help, as it can give confidence about what else could be explored using Topologic. As this is open source, I am sure this is addressable by someone or other.
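To make the kind of calculation I mean concrete, here is a minimal sketch of one common software complexity measure, cyclomatic complexity, approximated as one plus the number of decision points in a piece of code. It uses only Python's standard `ast` module. The function name `cyclomatic_complexity`, the list of node types counted, and the `classify` sample are assumptions made for this illustration; none of it comes from Topologic or OpenCASCADE, and dedicated tools apply more detailed rules.

```python
import ast

# Node types that each add one decision point. This list is an assumption of
# the sketch; dedicated complexity tools apply more detailed rules.
_BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def cyclomatic_complexity(source: str) -> int:
    """Approximate cyclomatic complexity: 1 + number of decision points."""
    count = 1  # a straight-line program has exactly one path
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, _BRANCH_NODES):
            count += 1
        elif isinstance(node, ast.BoolOp):
            # 'a and b and c' has three operands and adds two extra paths
            count += len(node.values) - 1
    return count


# Hypothetical sample code to measure (not taken from any real project).
SAMPLE = """
def classify(area, height):
    if area > 100 and height > 3:
        return "hall"
    for _ in range(2):
        if area < 10:
            return "closet"
    return "room"
"""

print(cyclomatic_complexity(SAMPLE))
```

A number like this says nothing about geometric or algorithmic cost; it only indicates how many independent paths a reader or tester has to keep in mind.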

The Dictionary class uses the OpenCASCADE attribute system. So again, you can study that for any limitations. We simply rationalised it and did some interesting combinatorial work where Boolean operations affect and are affected by dictionaries.

I've been following OpenCASCADE (and other such projects) for many years. What I find (and I hope I am not making a strawman argument here) is that many of the graphics data structures arose from the fact that the original pioneers were facing really hard problems in terms of computer resources (memory, etc.). Those limitations gave the overarching direction for how the math of vector graphics proceeded. Those directions are still with us.

In fact, not just the math but even operational strategies -- there is this fascinating video on the "Computerphile" YouTube channel (and other places) where one of the pioneers of Unix (Brian Kernighan) methodically explains the procedure of using "pipelines": different utilities are piped together to give a huge amount of power, both in terms of computation and data handling capability, in those early days of highly limited resources. It is fascinating how they zeroed in on the correct way to slice and dice the problems they were facing. They got the "Narasimha" thresholds right.
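The pipeline idea can be sketched in a few lines of Python, with generators standing in for the small utilities. The stage names (`read_lines`, `grep`, `cut_field`, `count`) only loosely mirror their Unix namesakes, and the `ROOMS` data is made up for this illustration. Each stage handles one line at a time, so nothing has to hold the whole data set in memory.

```python
from typing import Iterable, Iterator


def read_lines(text: str) -> Iterator[str]:
    """Source stage: yield one line at a time (loosely like `cat`)."""
    yield from text.splitlines()


def grep(lines: Iterable[str], needle: str) -> Iterator[str]:
    """Filter stage: pass through only the lines containing `needle`."""
    for line in lines:
        if needle in line:
            yield line


def cut_field(lines: Iterable[str], index: int, sep: str = ",") -> Iterator[str]:
    """Projection stage: keep a single field of each line (loosely like `cut`)."""
    for line in lines:
        yield line.split(sep)[index]


def count(items: Iterable[str]) -> int:
    """Sink stage: count the items that reach the end (loosely like `wc -l`)."""
    return sum(1 for _ in items)


# Made-up sample data: room name, floor, area in square metres.
ROOMS = """kitchen,ground,12.5
bedroom,first,14.0
bathroom,first,4.2
living,ground,22.0"""

# Roughly: cat rooms | grep ",first," | cut -d, -f1
first_floor_names = list(cut_field(grep(read_lines(ROOMS), ",first,"), 0))
print(first_floor_names)

# Roughly: cat rooms | grep ground | wc -l
print(count(grep(read_lines(ROOMS), "ground")))
```

The stages know nothing about each other; the only contract between them is "a stream of lines", which is what makes them freely recombinable.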

I am of the opinion that when it comes to architecture, we need to first lay out what the challenge really is. I always try to see if I am forcing the designer to slice and dice the data in ways that cannot later be joined back to recover the holistic.

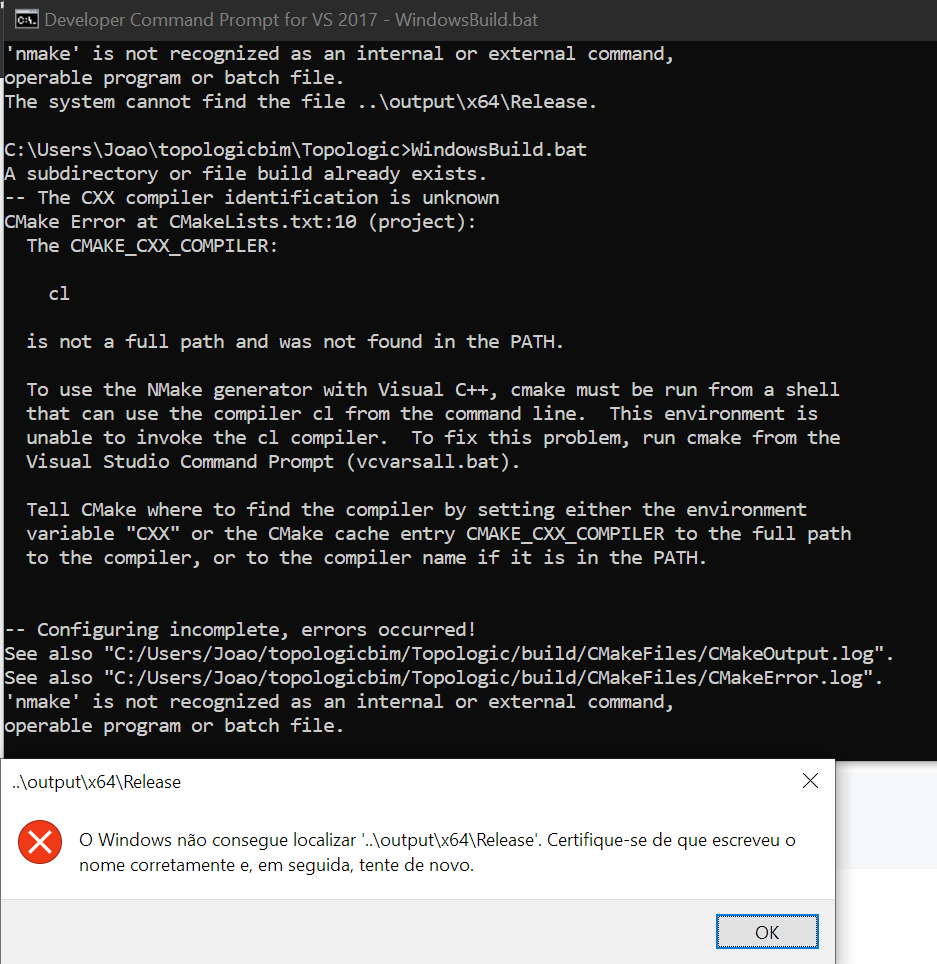

Obviously computer memory will always be a limiting factor, but it is so for Word and PowerPoint and AutoCAD. It is not a specific thing that limits Topologic. That said, there might be memory leaks due to bugs. We can never get it 100% perfect, but the source is available and anyone can suggest improvements and bug fixes.

I get what you say -- but I meant something more nuanced. Even if there is not enough RAM, there are surely data handling strategies that allow efficient handling of extremely large data -- for example, the column-based database used by Google to handle the big-data search problem. Obviously, there is no computer on earth that can take the entire Google search space into RAM and then zero in on the search the user asked for. Yet the job is done.
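As a rough illustration of why a column-based layout helps, here is a toy sketch in which each attribute is stored as its own array, so a query touches only the columns it needs. The `ColumnStore` class, its methods, and the room data are all hypothetical and written just for this post; this is not how Google's systems or Topologic are implemented. In a real system each column would typically live in its own file or block on disk, so columns that are never read are never loaded.

```python
from array import array


class ColumnStore:
    """Toy column-oriented table: one sequence per attribute."""

    def __init__(self, **columns):
        lengths = {len(col) for col in columns.values()}
        if len(lengths) > 1:
            raise ValueError("all columns must have the same length")
        self.columns = columns
        self.reads = []  # log of columns touched, for illustration only

    def column(self, name):
        """Return one column and record that it was read."""
        self.reads.append(name)
        return self.columns[name]

    def sum_where(self, value_col, filter_col, predicate):
        """Sum value_col over the rows where predicate(filter_col value) holds."""
        values = self.column(value_col)
        flags = self.column(filter_col)
        return sum(v for v, f in zip(values, flags) if predicate(f))


# Made-up data: four rooms with a floor index and an area in square metres.
store = ColumnStore(
    name=["kitchen", "bedroom", "bathroom", "living"],
    floor=array("i", [0, 1, 1, 0]),
    area=array("d", [12.5, 14.0, 4.2, 22.0]),
)

ground_area = store.sum_where("area", "floor", lambda f: f == 0)
print(ground_area)   # total area on floor 0
print(store.reads)   # the "name" column was never touched
```

A row-based layout would have had to walk past every room's name just to add up the areas.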

In my hunt for data structures, I have found that a proper OOP-based approach and a fractal-esque approach are the only two ways in which one can achieve efficiency in handling really large data. (I am of course biased: TAD uses both.)

Computer software and digital representation will never imitate the greyness of sketching and manual design, but there comes a point where knowledge, information, topology, logic, and geometry need to be represented. That is when I believe Topologic can help.

I understand this -- and I always struggle with the question of where the boundaries are, and how to negotiate them. I always end up questioning all reductionist approaches. Architecture is a "giant synthesis machine" -- the architecture around us holistically provides the context for each instance of our experience. Basically, things are quite stickily connected to each other, and capturing those "sticky connections" adequately and "manageably" inside a representational model is important.

The reason I keep asking questions of this nature, and stressing the need to preserve the holistic as much as possible, is this: today, many of the ideal pursuits in the modeling of the built environment (sustainability, carbon footprint, energy consumption, global warming, etc.) are not getting done, possibly because where the tyre hits the road (i.e. where the practical architect actually gets down to designing) he or she is facing a tug-of-war in many areas.

For example, if there is a modeling system that is good at doing some calculations on sustainability, the same system may go against other goals that the architect has -- maybe aesthetics, maybe acoustics ... maybe things that arose in that specific project just in time (such as the ones I faced in that mountain resort). I therefore felt that if my only aim were to handle at least part of the problem well, the question would become: "Who will define that part? Where should the model be cleaved?" And then I am again facing the same problem of multiple modeling methods all over. Instead, I used a different technique: have a holistic "fountainhead" of a model -- and from there let each goal be met.

As I said earlier, I am not really a pure theoretician, nor am I a pure whatever ... but I do feel it is important to keep this point alive in the minds of those who have the time, money and energy to delve deep into architecture modeling. (Kindly pardon my pedantry... been teaching for many years.)